We are pleased to announce the 0.8.0 release of Alibi Detect. The release adds four new drift detectors suited to monitoring model performance in the supervised setting, where label feedback is available: the Cramér-von Mises (CVM) and Online Cramér-von Mises detectors for continuous performance indicators, and the Fisher’s Exact Test (FET) and Online Fisher’s Exact Test detectors for binary performance indicators. Using these detectors in the supervised setting allows us to detect harmful data drift in a principled manner.

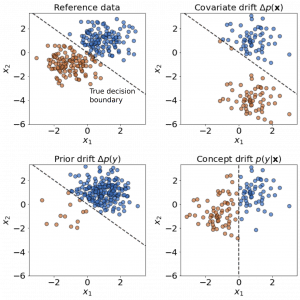

As discussed in What is Drift?, several types of drift can occur:


- Covariate drift: Also referred to as input drift, this occurs when the distribution of the input data x has shifted.

- Prior drift: Also referred to as label drift, this occurs when the distribution of the outputs y has shifted.

- Concept drift: This occurs when the underlying process being modeled has changed, that is, when the relationship between the inputs x and the outputs y has shifted.
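To make the three definitions concrete, here is a toy simulation. It is a minimal sketch under our own assumptions: the reference process (x drawn from a standard normal, y a noisy threshold of x) and the helper names `reference_pair` and `sample` are hypothetical and not part of Alibi Detect. Each kind of drift changes exactly one piece of the joint distribution of x and y.

```python
import random

def reference_pair(rng):
    """Hypothetical reference process: x ~ N(0, 1), y = 1 if x + noise > 0 else 0."""
    x = rng.gauss(0.0, 1.0)
    y = int(x + rng.gauss(0.0, 0.5) > 0)
    return x, y

def sample(n, drift=None, seed=0):
    """Draw n (x, y) pairs, optionally with one kind of drift applied."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        if drift == "covariate":
            # p(x) shifts (mean 0 -> 1); the labelling rule p(y|x) is unchanged.
            x = rng.gauss(1.0, 1.0)
            y = int(x + rng.gauss(0.0, 0.5) > 0)
        elif drift == "prior":
            # p(y) shifts to P(y=1) = 0.8; p(x|y) is preserved by rejection
            # sampling reference pairs until one with the target label appears.
            target = int(rng.random() < 0.8)
            x, y = reference_pair(rng)
            while y != target:
                x, y = reference_pair(rng)
        elif drift == "concept":
            # p(x) is unchanged, but the labelling rule p(y|x) is inverted.
            x = rng.gauss(0.0, 1.0)
            y = int(x + rng.gauss(0.0, 0.5) < 0)
        else:
            x, y = reference_pair(rng)
        data.append((x, y))
    return data
```

Note that under concept drift the inputs x follow exactly the same distribution as in the reference data, so a detector that only looks at inputs has nothing to find; only the labels reveal the change.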

In many cases, labels are not available in deployment, and we are restricted to detecting covariate drift (or prior drift, if we use model predictions as a proxy for the true labels). However, when labels are available, we can use the new detectors to monitor the performance of our model directly and choose to detect only “malicious” drift that degrades this performance. Malicious drift may be caused by any of the types of drift introduced above, including concept drift, which can’t be detected in the unsupervised case.
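When labels are available, a natural binary performance indicator is whether each prediction was correct. The sketch below turns predictions and labels into 0/1 correctness indicators and applies a one-sided Fisher’s exact test asking whether accuracy on a test batch is lower than on a reference batch. It is written from scratch with the standard library for illustration; the function names are hypothetical, and this is not Alibi Detect’s FET implementation.

```python
import math

def correctness(preds, labels):
    """Binary performance indicator: 1 if a prediction matches its label, else 0."""
    return [int(p == y) for p, y in zip(preds, labels)]

def fisher_exact_lower(ref_correct, test_correct):
    """One-sided Fisher's exact test p-value for H1: test accuracy < reference accuracy.

    Conditional on the total number of correct predictions, the number of correct
    test predictions follows a hypergeometric distribution under H0 (no drift).
    """
    n_ref, n_test = len(ref_correct), len(test_correct)
    k_test = sum(test_correct)
    n_total = n_ref + n_test
    k_total = sum(ref_correct) + k_test
    denom = math.comb(n_total, n_test)
    # Sum the hypergeometric probabilities P(X = i) for all i <= observed k_test.
    tail = sum(
        math.comb(k_total, i) * math.comb(n_total - k_total, n_test - i)
        for i in range(k_test + 1)
    )
    return tail / denom

# Hypothetical example: 90% reference accuracy vs. 70% accuracy after deployment.
ref = correctness([1] * 100, [1] * 90 + [0] * 10)
test = correctness([1] * 100, [1] * 70 + [0] * 30)
p_value = fisher_exact_lower(ref, test)
print(f"p-value: {p_value:.2e}")  # well below 0.05 here, so drift would be flagged
```

Because the test is one-sided, an improvement in accuracy would not be flagged, which matches the goal of detecting only drift that harms performance.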

A good question to ask at this point is: “why not just check for a change in the mean of the model’s accuracy or loss?” The main answer is that monitoring the mean alone would not capture drift in higher-order moments, such as a change in variance, which the CVM detector is able to detect. The new detectors also have several other desirable features. They perform a statistical hypothesis test, so drift detection is principled and the user can set a desired false positive rate (FPR). Additionally, the detectors have very few hyperparameters and make no assumptions about the distribution of the data, which is especially advantageous when the “data” is a model’s accuracy or loss.
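The point about higher-order moments can be checked with a small experiment. The sketch below computes the two-sample Cramér-von Mises statistic from the two empirical CDFs, calibrates it with a permutation test, and compares two samples of simulated per-instance losses that share the same mean but differ in variance. It is an illustrative from-scratch implementation (the function names and the permutation approach are our own choices), not the code used inside Alibi Detect’s CVM detector.

```python
import bisect
import random

def cvm_statistic(x, y):
    """Two-sample Cramér-von Mises statistic computed from the two empirical CDFs."""
    n, m = len(x), len(y)
    xs, ys = sorted(x), sorted(y)
    total = 0.0
    for z in xs + ys:  # evaluate the ECDF difference at every pooled point
        fx = bisect.bisect_right(xs, z) / n
        fy = bisect.bisect_right(ys, z) / m
        total += (fx - fy) ** 2
    return n * m / (n + m) ** 2 * total

def cvm_permutation_test(x, y, n_perms=500, seed=0):
    """p-value from randomly re-splitting the pooled sample under H0 (no drift)."""
    rng = random.Random(seed)
    observed = cvm_statistic(x, y)
    pooled = list(x) + list(y)
    exceed = 0
    for _ in range(n_perms):
        rng.shuffle(pooled)
        if cvm_statistic(pooled[:len(x)], pooled[len(x):]) >= observed:
            exceed += 1
    return (exceed + 1) / (n_perms + 1)

# Simulated per-instance losses: same mean (1.0), but the variance grows after deployment.
rng = random.Random(42)
ref_loss = [rng.gauss(1.0, 0.1) for _ in range(200)]
test_loss = [rng.gauss(1.0, 0.5) for _ in range(200)]
print(sum(ref_loss) / 200, sum(test_loss) / 200)  # both means close to 1.0
print(cvm_permutation_test(ref_loss, test_loss))  # small p-value: drift detected
```

A detector that only compared the two means would see almost no difference here, whereas the CVM statistic responds to any difference between the two distributions.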

In many cases, data arrives sequentially, and the user wishes to perform drift detection as each instance arrives so that drift is detected as soon as possible. The usual offline detectors are not suited to this, since computing their p-values at every time step is too expensive. They also don’t account for the correlation between test outcomes when overlapping windows of test data are used, which leads to miscalibrated detectors operating at an unknown FPR. A well-calibrated FPR is crucial for judging the significance of a drift detection: without calibration, detections can be useless, since there is no way of knowing what fraction of them are false positives.
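The miscalibration problem can be seen with a quick simulation. Here streams of binary correctness indicators are generated with no drift at all, and a one-sided binomial test at level 0.05 is naively re-run on every overlapping window. For simplicity, the sketch assumes the reference accuracy `p0` is known exactly rather than estimated from reference data; the function names and parameter values are hypothetical.

```python
import math
import random

def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))

def naive_sliding_fpr(p0=0.9, window=50, n_steps=500, alpha=0.05, n_streams=200, seed=0):
    """Fraction of drift-free streams flagged at least once by a level-alpha test
    that is naively re-run on every overlapping window."""
    rng = random.Random(seed)
    # Critical value: largest correct-count whose lower-tail p-value is <= alpha.
    k_crit = max(
        (k for k in range(window + 1) if binom_cdf(k, window, p0) <= alpha), default=-1
    )
    flagged = 0
    for _ in range(n_streams):
        # Correctness indicators with constant accuracy p0, i.e. no drift at all.
        stream = [int(rng.random() < p0) for _ in range(window + n_steps - 1)]
        count = sum(stream[:window])
        hit = count <= k_crit
        for t in range(1, n_steps):
            # Slide the window one step: add the newest indicator, drop the oldest.
            count += stream[t + window - 1] - stream[t - 1]
            hit = hit or count <= k_crit
        flagged += hit
    return flagged / n_streams

print(naive_sliding_fpr())  # well above the nominal 0.05
```

Each individual test respects its 0.05 level, yet the fraction of drift-free streams with at least one detection ends up well above 0.05, and it keeps growing as the stream gets longer.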

To tackle this challenge, the online detectors in Alibi Detect perform a threshold calibration step when they are initialized, so that they can operate rapidly on deployment data while accurately targeting the desired FPR. For the unsupervised case, the existing MMD and LSDD detectors in Alibi Detect leverage the calibration method introduced by Cobb et al. (2021) to detect covariate drift in an online manner, whilst the new CVM and FET detectors adapt ideas from this paper and this paper by Ross et al. to tackle the supervised case. The calibration process in the online CVM detector is moderately costly, so it is parallelized with Numba to keep it fast.
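A much-simplified version of the threshold calibration idea can be sketched as follows. Drift-free streams are simulated by bootstrapping from reference correctness indicators, a windowed statistic (here, the drop in accuracy relative to the reference) is computed at each time step, and each step’s threshold is set so that roughly at most a fraction `fpr` of the simulated runs that have not yet fired would exceed it. This is only a sketch under our own assumptions (a single window size, a simple accuracy-drop statistic, hypothetical function names); it is not the algorithm implemented in Alibi Detect’s online CVM or FET detectors.

```python
import random

def calibrate_thresholds(ref, window, n_steps, fpr, n_sims=2000, seed=0):
    """Per-step thresholds targeting a per-step false positive rate of about `fpr`."""
    rng = random.Random(seed)
    ref_acc = sum(ref) / len(ref)
    alive = []  # prefix sums of simulated drift-free streams that have not fired yet
    for _ in range(n_sims):
        stream = [rng.choice(ref) for _ in range(window + n_steps - 1)]
        prefix = [0]
        for v in stream:
            prefix.append(prefix[-1] + v)
        alive.append(prefix)
    thresholds = []
    for t in range(n_steps):
        if not alive:
            break
        # Drift statistic: accuracy drop of window [t, t + window) versus the reference.
        stats = [ref_acc - (p[t + window] - p[t]) / window for p in alive]
        ordered = sorted(stats)
        # (1 - fpr) quantile: at most about a fraction fpr of runs lie strictly above it.
        thr = ordered[min(len(ordered) - 1, int((1 - fpr) * len(ordered)))]
        thresholds.append(thr)
        # Runs exceeding the threshold count as (false) detections and stop contributing.
        alive = [p for p, s in zip(alive, stats) if s <= thr]
    return thresholds

def first_detection(stream, ref, window, thresholds):
    """Index of the first window whose accuracy drop exceeds its threshold, else None."""
    ref_acc = sum(ref) / len(ref)
    for t, thr in enumerate(thresholds):
        if t + window > len(stream):
            break
        if ref_acc - sum(stream[t:t + window]) / window > thr:
            return t
    return None

ref = [1] * 90 + [0] * 10  # reference correctness indicators: 90% accuracy
thresholds = calibrate_thresholds(ref, window=20, n_steps=100, fpr=0.02)
print(first_detection([1, 0] * 60, ref, 20, thresholds))  # accuracy falls to 50%
print(first_detection([1] * 120, ref, 20, thresholds))    # no accuracy drop
```

All of the expensive simulation happens once, up front; at deployment time each new window only needs one statistic and one comparison against a precomputed threshold. Conditioning each step on runs that have not yet fired is what accounts for the correlation between overlapping windows.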

With the addition of the Cramér-von Mises and Fisher’s Exact Test detectors, harmful data drift can now be monitored in a principled manner. The drift detectors in Alibi Detect now cover all types of drift: covariate drift, prior drift and concept drift. Additionally, nearly all data modalities are covered, from text and images to molecular graphs, in both online and offline settings. On top of that, various methods provide more granular feedback, showing which features or instances are most responsible for the drift. All drift detectors support both TensorFlow and PyTorch backends. Check the documentation and examples for more information!