In the realm of natural language processing (NLP), the advent of Large Language Models (LLMs) has changed the way we approach many tasks, including automated data extraction. LLMs such as GPT show remarkable capabilities in understanding and generating human-like text. Entity or data extraction, a crucial component of information retrieval, involves identifying and classifying entities such as names, locations, organizations, and more within a given text. Leveraging LLMs for data extraction can improve both the accuracy and the efficiency of this process. These models excel at contextual understanding, which lets them discern subtle nuances and relationships between words and, in turn, identify entities more precisely. Whether applied to document analysis, social media monitoring, or data categorization, LLMs give NLP practitioners a potent tool for navigating the intricacies of language.

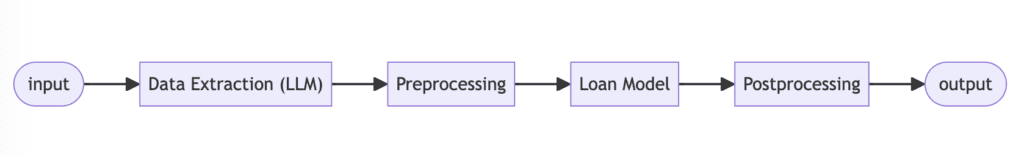

Entity or data extraction can be used as a preprocessing step on natural language prior to other downstream tasks. In this blog post, we’re going to showcase a flow that automates the extraction of data from a loan application using the Seldon Enterprise Platform. We’ll then use a Seldon Core v2 pipeline to feed the extracted data into a model that decides whether or not to accept the loan application.

In order to do this we’re going to:

- Deploy an OpenAI LLM that extracts the relevant features from the user-written text of a loan application.

- Create and deploy a Seldon Core v2 pipeline that wires the automated data extraction step up to a deployed classifier model.

So let’s get started!

Creating the Data-extractor Model

A remarkable capability of large language models is that they can be programmed to perform a wide range of NLP tasks through prompting. In this section we’re going to deploy an Enterprise Platform LLM runtime along with a prompt that performs automated data extraction on user-submitted text.

The Enterprise Platform OpenAI LLM runtime has been built to make it easy to use OpenAI’s large language models in the Seldon deployment ecosystem. We also provide a DeepSpeed runtime that lets you deploy your own large language models instead of OpenAI’s models. All of these runtimes are powered by our open-source MLServer technology.

There are really only two parts to setting up and deploying the OpenAI runtime. The first is the model-settings.json file:

```json
{
  "name": "data-extractor",
  "implementation": "mlserver_llm_api.LLMRuntime",
  "parameters": {
    "uri": "./prompt-template.txt",
    "extra": {
      "provider_id": "openai",
      "with_prompt_template": true,
      "config": {
        "model_id": "gpt-3.5-turbo",
        "model_type": "chat.completions"
      }
    }
  }
}
```

This specifies that we’re using the MLServer LLMRuntime, as well as the model_id and model_type. The file also tells the runtime that we’re using a prompt template and gives the template’s location via a URI. The second part is the prompt template itself. I used the following prompt:

```text
You are a helpful data entry assistant whose responsibility is extracting data from a message sent by a user. The following is such a message. Please extract the user's details and return them in a JSON dict with keys: "ApplicantIncome", "LoanAmount", "Loan_Amount_Term", "Gender", "Married", "Dependents", "Education", "Self_Employed", "Property_Area"
Please ensure that "ApplicantIncome" is an integer greater than 0
Please ensure that "LoanAmount" is an integer greater than 0
Please ensure that "Loan_Amount_Term" is an integer corresponding to number of months
Please ensure that "Gender" is either "Male" or "Female"
Please ensure that "Married" is either "No" or "Yes"
Please ensure that "Dependents" is one of "0", "1", "2" or "3+"
Please ensure that "Education" is one of "Graduate" or "Not_Graduate"
Please ensure that "Self_Employed" is one of "Yes" or "No"
Please ensure that "Property_Area" is one of "Rural", "Semiurban" or "Urban"
Please only return JSON, do not add any other text! If values are missing set them to a string: "none"

Loan Application: {text}
```

This template specifies a fixed prompt that is included with every request sent to this model. In particular, the {text} placeholder is populated with the user’s input.
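To make the substitution concrete, here is a minimal Python sketch of what filling the template amounts to. It illustrates an assumption rather than reproducing the runtime’s code: we assume `{text}` is replaced verbatim with the request’s text. `fill_template` and the shortened `PROMPT_TEMPLATE` are hypothetical names.

```python
# A shortened, hypothetical stand-in for prompt-template.txt.
PROMPT_TEMPLATE = (
    "You are a helpful data entry assistant whose responsibility is "
    "extracting data from a message sent by a user.\n"
    "Please only return JSON, do not add any other text!\n"
    "Loan Application: {text}"
)


def fill_template(template: str, text: str, placeholder: str = "{text}") -> str:
    """Build the prompt for one request by substituting the user's text."""
    if placeholder not in template:
        raise ValueError(f"template has no {placeholder} placeholder")
    # str.replace rather than str.format: any other braces in the template
    # (for example an example JSON object) are left untouched.
    return template.replace(placeholder, text)


prompt = fill_template(PROMPT_TEMPLATE, "I am married and my income is 50,000.")
print(prompt.splitlines()[-1])
# Loan Application: I am married and my income is 50,000.
```

Using a literal replace instead of `str.format` matters for prompts like this one, which often include JSON examples whose braces would otherwise be misread as format fields.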

Deploying the data-extractor Model

Assuming you have Seldon Core v2 set up on a cluster somewhere (if not, see this getting started guide), deploying is as easy as writing a custom resource, specifying a storage URI and running a kubectl command. In our case, I’ve placed the above model-settings.json and prompt-template.txt files in a Google Cloud Storage folder called data_extractor. Next we create a models.yaml file and add the following custom resource to it:

```yaml
apiVersion: mlops.seldon.io/v1alpha1
kind: Model
metadata:
  name: data-extractor
spec:
  storageUri: "gs://blogs/data-extraction/data_extractor"
  requirements:
  - openai
```

Notice that here we specify the openai requirement. This tells Seldon Core to schedule the model onto a server with the matching capability, in this case the Enterprise Platform LLMRuntime.
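Conceptually, Seldon Core v2 places a model only on a server whose capabilities include all of the model’s requirements. The Python sketch below illustrates that subset check. It is not Seldon’s scheduler, which may take other factors such as memory into account, and the server names and capability sets in `SERVERS` are hypothetical.

```python
# Hypothetical servers and their capabilities, for illustration only.
SERVERS = {
    "mlserver": {"sklearn", "xgboost", "mlflow", "python"},
    "triton": {"onnx", "tensorflow", "pytorch"},
    "llm-openai": {"openai"},
}


def eligible_servers(requirements, servers=SERVERS):
    """Return the servers whose capabilities include every requirement."""
    required = set(requirements)
    return sorted(name for name, caps in servers.items() if required <= caps)


print(eligible_servers(["openai"]))             # ['llm-openai']
print(eligible_servers(["sklearn"]))            # ['mlserver']
print(eligible_servers(["openai", "sklearn"]))  # []
```

The last call shows why requirements should be kept minimal. Each extra entry narrows the set of servers that can host the model.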

We can deploy this model by running `kubectl create -f models.yaml -n seldon-mesh`. You can then test the OpenAI model using the following:

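```python
import subprocess

import requests


def get_mesh_ip():
    # Look up the external IP of the seldon-mesh LoadBalancer service.
    cmd = "kubectl get svc seldon-mesh -n seldon-mesh -o jsonpath='{.status.loadBalancer.ingress[0].ip}'"
    return subprocess.check_output(cmd, shell=True).decode("utf-8").strip()


loan_application = """
Hi,
I am looking for a loan to buy a new car.
I am a software engineer and I'm employed by Google. I am married and have a
wife and 2 kids. I have a house in central London and My income is 50,000.
I would like a loan amount of 100,000 and I am looking for a term of 10 years. I'm a graduate.
Mr John Doe
"""

# A V2 inference request carrying the raw application text as a BYTES tensor.
inference_request = {
    "inputs": [
        {
            "name": "text",
            "shape": [1, 1],
            "datatype": "BYTES",
            "data": [loan_application],
        }
    ]
}

# The model name must match metadata.name in models.yaml ("data-extractor"),
# and the seldon-model header tells the mesh which model to route to.
headers = {
    "Content-Type": "application/json",
    "seldon-model": "data-extractor",
}

endpoint = f"http://{get_mesh_ip()}/v2/models/data-extractor/infer"
response = requests.post(endpoint, headers=headers, json=inference_request)
response.json()
```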

Running the above returns a V2 inference response. The extracted fields it contains are:

{'ApplicantIncome': 50000, 'LoanAmount': 100000, 'Loan_Amount_Term': 120, 'Gender': 'Male', 'Married': 'Yes', 'Dependents': '2', 'Education': 'Graduate', 'Self_Employed': 'No', 'Property_Area': 'Urban'}

These feature values can then be passed on to another model that will perform a prediction on them. In our case, it’ll be a random forest classifier that will predict whether or not to accept the application.
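LLM output is not guaranteed to respect the constraints in the prompt, so it can be worth checking the extracted fields before they reach the classifier. Below is a minimal sketch that assumes the extractor’s JSON has already been parsed into a Python dict. `validate_extraction` is a hypothetical helper, and its allowed values are copied from the prompt above.

```python
MISSING = "none"  # the sentinel the prompt asks for when a value is absent

# Allowed values, copied from the constraints in the prompt template.
ALLOWED = {
    "Gender": {"Male", "Female"},
    "Married": {"No", "Yes"},
    "Dependents": {"0", "1", "2", "3+"},
    "Education": {"Graduate", "Not_Graduate"},
    "Self_Employed": {"Yes", "No"},
    "Property_Area": {"Rural", "Semiurban", "Urban"},
}
# The prompt only requires the first two to be > 0. We also require a
# positive term, which is our own (slightly stricter) assumption.
POSITIVE_INTS = ("ApplicantIncome", "LoanAmount", "Loan_Amount_Term")


def validate_extraction(fields: dict) -> list:
    """Return readable problems; an empty list means the fields look valid."""
    problems = []
    for key in POSITIVE_INTS:
        value = fields.get(key, MISSING)
        if value == MISSING:
            problems.append(f"{key} is missing")
        # bool is a subclass of int, so reject it explicitly.
        elif isinstance(value, bool) or not isinstance(value, int) or value <= 0:
            problems.append(f"{key} should be a positive integer, got {value!r}")
    for key, allowed in ALLOWED.items():
        value = fields.get(key, MISSING)
        if value == MISSING:
            problems.append(f"{key} is missing")
        elif not isinstance(value, str) or value not in allowed:
            problems.append(f"{key} should be one of {sorted(allowed)}, got {value!r}")
    return problems


extracted = {
    "ApplicantIncome": 50000, "LoanAmount": 100000, "Loan_Amount_Term": 120,
    "Gender": "Male", "Married": "Yes", "Dependents": "2",
    "Education": "Graduate", "Self_Employed": "No", "Property_Area": "Urban",
}
print(validate_extraction(extracted))  # []
print(validate_extraction({**extracted, "Dependents": 2, "LoanAmount": "none"}))
```

A non-empty result could be used to reject the request or, in a conversational setting, to ask the user for the missing details.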

Creating the Pipeline

The pipeline we want to deploy requires a couple more components, which we will briefly touch on. The first is the actual loan application predictor: an sklearn RandomForestClassifier deployed using MLServer’s open-source sklearn runtime. For an example of how this works, see the following guide.

The second is a custom preprocessing MLServer model that converts the dictionary output of the OpenAI data extractor into the format required by the sklearn runtime. See here for how to create custom MLServer runtimes.
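The preprocessor’s code isn’t shown in this post, so the following is only a sketch of the kind of conversion it performs, written as plain Python functions rather than an MLServer runtime. The feature order and categorical encodings (`FEATURE_ORDER`, `ENCODINGS`) are hypothetical and would need to match whatever the RandomForestClassifier was trained on. The result is shaped as a one-row `FP64` V2 tensor, and the tensor name is a placeholder.

```python
# Hypothetical feature order and encodings; they must match the training data.
FEATURE_ORDER = [
    "Gender", "Married", "Dependents", "Education", "Self_Employed",
    "ApplicantIncome", "LoanAmount", "Loan_Amount_Term", "Property_Area",
]
ENCODINGS = {
    "Gender": {"Female": 0, "Male": 1},
    "Married": {"No": 0, "Yes": 1},
    "Dependents": {"0": 0, "1": 1, "2": 2, "3+": 3},
    "Education": {"Not_Graduate": 0, "Graduate": 1},
    "Self_Employed": {"No": 0, "Yes": 1},
    "Property_Area": {"Rural": 0, "Semiurban": 1, "Urban": 2},
}


def to_feature_row(fields: dict) -> list:
    """Encode the extracted dict as a numeric row in FEATURE_ORDER.

    Raises KeyError for an unknown category (including "none") and
    ValueError for a non-numeric value, so validate the fields first.
    """
    row = []
    for name in FEATURE_ORDER:
        value = fields[name]
        if name in ENCODINGS:
            value = ENCODINGS[name][value]
        row.append(float(value))
    return row


def to_v2_tensor(fields: dict, tensor_name: str = "input-0") -> dict:
    """Wrap a single encoded row as a V2 inference tensor of shape [1, n]."""
    row = to_feature_row(fields)
    return {"name": tensor_name, "shape": [1, len(row)], "datatype": "FP64", "data": row}


extracted = {
    "ApplicantIncome": 50000, "LoanAmount": 100000, "Loan_Amount_Term": 120,
    "Gender": "Male", "Married": "Yes", "Dependents": "2",
    "Education": "Graduate", "Self_Employed": "No", "Property_Area": "Urban",
}
print(to_feature_row(extracted))
# [1.0, 1.0, 2.0, 1.0, 0.0, 50000.0, 100000.0, 120.0, 2.0]
```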

These two components are deployed in the same way as the data extractor above: we place them in a Google Cloud Storage bucket and use kubectl to load them onto the cluster. Once all these pieces are in place, all that’s left is to write the pipeline.yaml and deploy! This is the required configuration:

```yaml
apiVersion: mlops.seldon.io/v1alpha1
kind: Pipeline
metadata:
  name: data-extraction
spec:
  steps:
    - name: data-extractor
    - name: preprocessor
      inputs:
      - data-extractor
    - name: loan-prediction-model
      inputs:
      - preprocessor
  output:
    steps:
    - loan-prediction-model
    - data-extractor
```

As you can see, we’ve defined a pipeline called data-extraction. It chains three steps and returns the outputs of two of them:

- The data-extractor takes the user-written text of the loan application and extracts the features the sklearn model needs to make its prediction.

- The output of the data-extractor is passed to the preprocessor, which formats the extracted features for the loan-prediction-model.

- The output of the preprocessor is passed to the loan-prediction-model that makes a prediction: 1 for loan application granted and 0 for declined.

- Finally, the loan-prediction-model output, along with the data-extractor output, is returned from the pipeline.
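Because each step lists the steps it takes inputs from, the pipeline forms a small directed acyclic graph, and its execution order is a topological order of that graph. The toy Python sketch below uses the standard library’s `graphlib` to illustrate this. It is not how Seldon Core v2 executes pipelines, since there each step is a separately deployed model. The lambda handlers are hypothetical stand-ins for the three models, and the rule that a step with no inputs receives the pipeline input is an assumption made for this toy.

```python
from graphlib import TopologicalSorter

# Each step mapped to the steps it takes inputs from, mirroring pipeline.yaml.
PIPELINE_STEPS = {
    "data-extractor": [],
    "preprocessor": ["data-extractor"],
    "loan-prediction-model": ["preprocessor"],
}
PIPELINE_OUTPUTS = ["loan-prediction-model", "data-extractor"]

order = list(TopologicalSorter(PIPELINE_STEPS).static_order())
print(order)  # ['data-extractor', 'preprocessor', 'loan-prediction-model']


def run_pipeline(request, handlers, steps=PIPELINE_STEPS, outputs=PIPELINE_OUTPUTS):
    """Run each step once its inputs are ready; return the declared outputs."""
    results = {}
    for step in TopologicalSorter(steps).static_order():
        upstream = steps[step]
        # In this toy, a step without declared inputs receives the pipeline input.
        args = [results[u] for u in upstream] if upstream else [request]
        results[step] = handlers[step](*args)
    return {name: results[name] for name in outputs}


# Hypothetical stand-ins for the three deployed models.
toy_handlers = {
    "data-extractor": lambda text: {"Married": "Yes" if "married" in text else "No"},
    "preprocessor": lambda fields: [1.0 if fields["Married"] == "Yes" else 0.0],
    "loan-prediction-model": lambda row: int(row[0] > 0.5),
}
print(run_pipeline("I am married", toy_handlers))
# {'loan-prediction-model': 1, 'data-extractor': {'Married': 'Yes'}}
```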

This is a simple example of the kind of pipeline that can be deployed with Seldon Core v2. For a more detailed guide, see here.

To deploy the pipeline we use kubectl again: `kubectl apply -f pipeline.yaml -n seldon-mesh`. We should now have a running pipeline in our cluster that chains these three models together.

We can test using:

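```python
# Reuses requests, get_mesh_ip and loan_application from the earlier snippet.
inference_request = {
    "inputs": [
        {
            "name": "text",
            "shape": [1, 1],
            "datatype": "BYTES",
            "data": [loan_application],
        }
    ]
}

# The ".pipeline" suffix in the seldon-model header routes the request to
# the data-extraction pipeline rather than to a single model.
headers = {
    "Content-Type": "application/json",
    "seldon-model": "data-extraction.pipeline",
}

endpoint = f"http://{get_mesh_ip()}/v2/models/data-extraction/infer"
response = requests.post(endpoint, headers=headers, json=inference_request)
response.json()
```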

Running the above will return the extracted values along with a 1 or 0 that tells you if the loan is accepted or not.
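The pipeline’s reply is a V2 inference response with one output tensor per returned value. The sketch below shows one way to unpack it in Python. The tensor names (`predict`, `output`), the assumption that the extractor’s JSON arrives as a string in a BYTES tensor, and the helper names are all placeholders, so inspect your own `response.json()` to find the real layout.

```python
import json


def outputs_by_name(v2_response: dict) -> dict:
    """Map each output tensor's name to its list of data values."""
    return {out["name"]: out.get("data", []) for out in v2_response.get("outputs", [])}


def parse_extracted(raw: str) -> dict:
    """Parse the extractor's generated text, tolerating a Markdown code fence."""
    text = raw.strip()
    if text.startswith("`" * 3):
        # Drop the surrounding backticks and an optional "json" language tag.
        text = text.strip("`").removeprefix("json").strip()
    return json.loads(text)


def loan_decision(prediction) -> str:
    """Translate the classifier's 1/0 output into a readable decision."""
    return "accepted" if int(prediction) == 1 else "declined"


# Hypothetical response: the tensor names "predict" and "output" are placeholders.
example_response = {
    "model_name": "data-extraction",
    "outputs": [
        {"name": "predict", "shape": [1, 1], "datatype": "INT64", "data": [1]},
        {"name": "output", "shape": [1, 1], "datatype": "BYTES",
         "data": ['{"LoanAmount": 100000, "Married": "Yes"}']},
    ],
}
tensors = outputs_by_name(example_response)
print(loan_decision(tensors["predict"][0]))   # accepted
print(parse_extracted(tensors["output"][0]))  # {'LoanAmount': 100000, 'Married': 'Yes'}
```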

The above pipeline is a very simple example of a deployment that uses large language models for data extraction, but the idea can be pushed further. You might want to deploy a conversational chatbot that asks the user for the relevant information and extracts the data from their responses. This would be in contrast to the example above, where the user has to provide all the information at once. Seldon has built, and is continuing to build, a set of features that will let you easily build and deploy these kinds of apps, so that you can focus on the functionality of the application rather than on deployment concerns.
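The following hypothetical sketch shows the bookkeeping such a chatbot would need. It keeps the fields extracted so far, merges in each new extraction without overwriting known values with the prompt’s "none" sentinel, and asks about the first field that is still missing. The question wording and helper names are illustrative assumptions. In a real app the LLM would likely phrase the questions, and each reply would go back through the data extractor.

```python
MISSING = "none"  # the prompt's sentinel for values it could not find

# Hypothetical follow-up questions, one per field the prompt extracts.
QUESTIONS = {
    "ApplicantIncome": "What is your income?",
    "LoanAmount": "How much would you like to borrow?",
    "Loan_Amount_Term": "Over how many months would you like to repay the loan?",
    "Gender": "What is your gender?",
    "Married": "Are you married?",
    "Dependents": "How many dependents do you have?",
    "Education": "Are you a graduate?",
    "Self_Employed": "Are you self-employed?",
    "Property_Area": "Is your property in a rural, semi-urban or urban area?",
}


def merge_answers(known: dict, update: dict) -> dict:
    """Keep earlier values and fill in anything the latest reply provided."""
    merged = dict(known)
    for key, value in update.items():
        if value != MISSING:
            merged[key] = value
    return merged


def next_question(fields: dict):
    """Return the question for the first still-missing field, or None if complete."""
    for key, question in QUESTIONS.items():
        if fields.get(key, MISSING) == MISSING:
            return question
    return None


state = merge_answers({}, {"LoanAmount": 100000, "Married": "none"})
print(next_question(state))  # What is your income?
```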

Conclusion

As well as automated data extraction, language models let us tackle a wide array of natural language processing tasks. Sentiment analysis, for instance, leverages the contextual understanding of LLMs to discern the emotional tone of a text, enabling applications to gauge user opinions and attitudes. Question answering becomes more intuitive because these models can comprehend complex queries and generate coherent responses. Summarisation benefits from their ability to condense extensive documents while keeping the key information. Language models also play a pivotal role in machine translation by capturing nuances and idiomatic expressions, contributing to more accurate and contextually appropriate translations. Whether it’s text completion, text generation, or even code generation, the versatility of language models extends to a myriad of practical applications across natural language understanding and generation.

In this blog post we’ve seen how to leverage the Enterprise Platform to automate the extraction of pertinent information from a loan application. We’ve also deployed a pipeline, powered by Seldon Core v2, that integrates the data extraction step with a downstream task: a loan application classifier implemented as a random forest. The adaptability of LLMs, coupled with the deployment infrastructure provided by Seldon Core, lets practitioners build sophisticated natural language pipelines with relatively little effort. As NLP and machine learning continue to evolve, combining powerful language models with streamlined deployment frameworks opens up avenues for intelligent and efficient systems across many domains, from finance to document analysis and beyond.