+Module

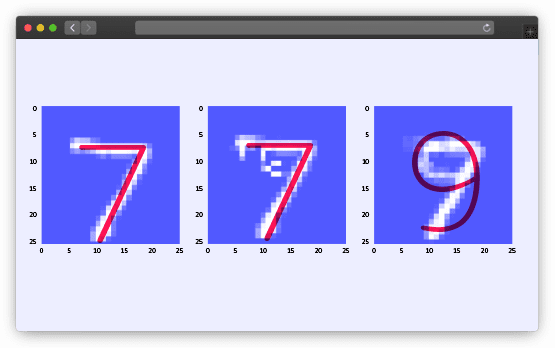

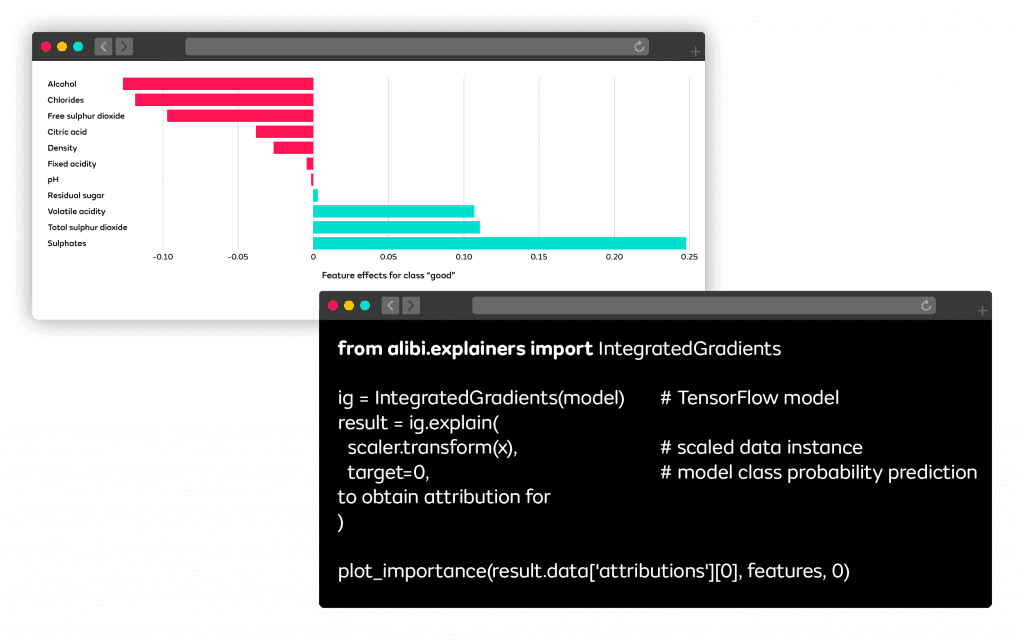

Add transparency and control over model decisions, fostering trust and understanding through clear explanations for predictions.

Ensure Model Performance

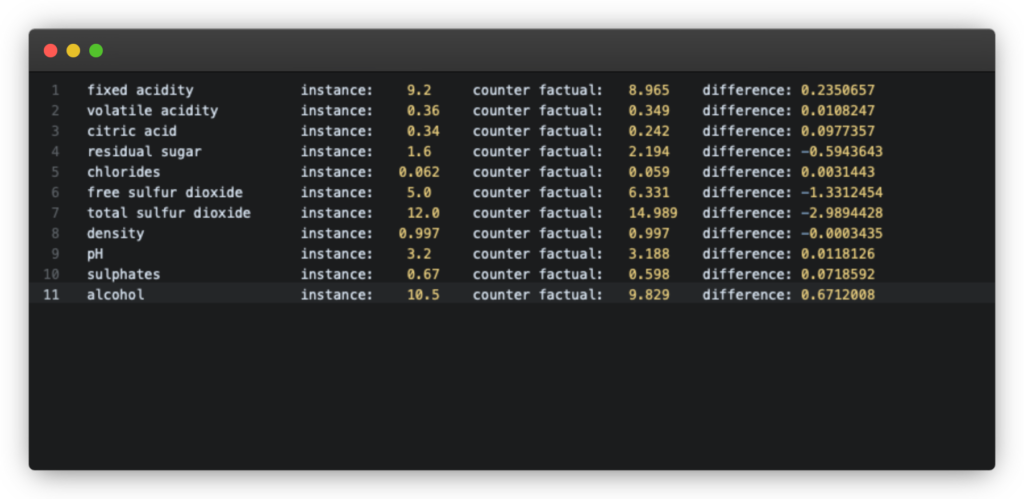

Ensure accuracy by monitoring changes in your data

When your data changes, so can your model's predictions.

Feature Selection

Strengthen intuition for feature selection

Gain insights into how features influence model performance.

Create Consistent Predictions

Derive a set of features and attributes

Return a score that indicates the presence of features and instances that trick the model into a wrong outcome.

+Module

Make your machine learning efforts more reliable and build confidence in your deployed models with tools like enhanced outlier, adversarial and drift detection.

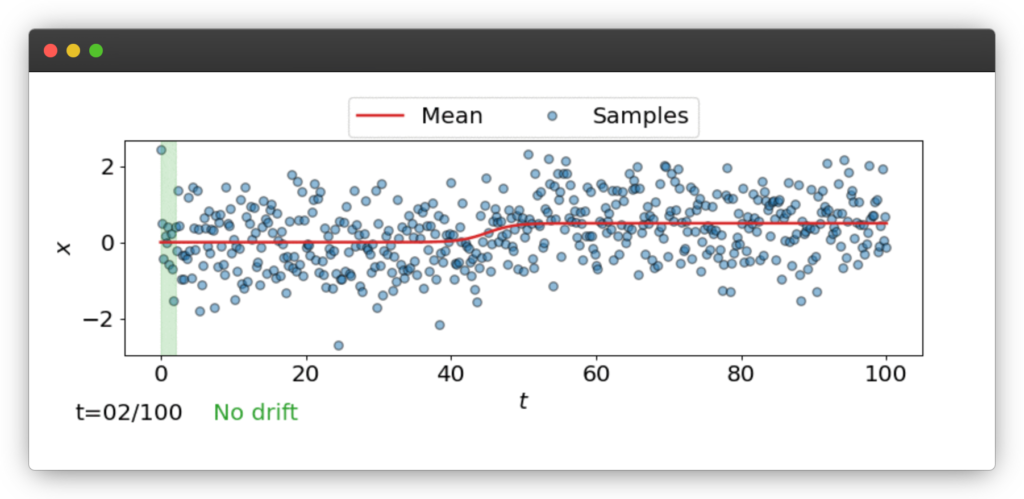

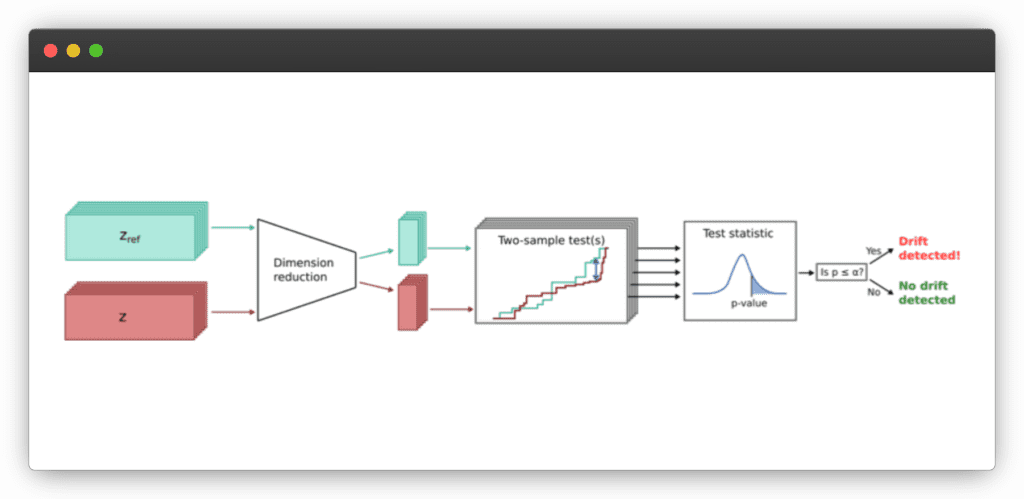

Advanced Drift Detection

Take control of model performance

Notice changes in data dynamics and determine whether detected drift will cause a decrease in model performance.
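To make the idea concrete, here is a minimal sketch of drift detection on a single numeric feature, using a two-sample Kolmogorov-Smirnov statistic written with the Python standard library only. It is an illustration, not Seldon's implementation: the function names `ks_statistic` and `drift_detected`, the `threshold` of 0.2, and the synthetic data are all assumptions made for the example. It only flags that the distribution changed; judging whether that change hurts model performance is a separate step.

```python
import random
from bisect import bisect_right


def ks_statistic(reference, current):
    """Largest gap between the empirical CDFs of two samples."""
    ref = sorted(reference)
    cur = sorted(current)
    max_gap = 0.0
    # The largest gap between two step functions occurs at a data point.
    for value in ref + cur:
        cdf_ref = bisect_right(ref, value) / len(ref)
        cdf_cur = bisect_right(cur, value) / len(cur)
        max_gap = max(max_gap, abs(cdf_ref - cdf_cur))
    return max_gap


def drift_detected(reference, current, threshold=0.2):
    """Flag drift when the KS statistic exceeds a hypothetical threshold."""
    return ks_statistic(reference, current) > threshold


# Synthetic data for illustration only.
rng = random.Random(0)
reference = [rng.gauss(0.0, 1.0) for _ in range(500)]  # training-time data
same = [rng.gauss(0.0, 1.0) for _ in range(500)]       # unchanged production data
shifted = [rng.gauss(1.5, 1.0) for _ in range(500)]    # production data after a shift

print(drift_detected(reference, same))
print(drift_detected(reference, shifted))
```

In practice the threshold would usually come from a p-value or from a calibration run on held-out reference data rather than a fixed constant.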

Outlier Detection

Discover critical anomalies in input and output data

Gain insights into how features influence model performance.
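As a rough illustration of what an outlier score can look like, the sketch below scores incoming values by their distance from the median of reference data, scaled by the median absolute deviation (a modified z-score). This is a simple stand-in technique, not Seldon's detector: the function name `outlier_scores`, the cut-off of 3.5, and the sample values are assumptions made for the example.

```python
from statistics import median


def outlier_scores(reference, values):
    """Score each value by its robust distance from the reference median."""
    center = median(reference)
    mad = median([abs(x - center) for x in reference])
    # 1.4826 makes the MAD comparable to a standard deviation for normal data.
    scale = 1.4826 * mad if mad > 0 else 1.0
    return [abs(v - center) / scale for v in values]


# Hypothetical reference data and two incoming values.
reference = [10.0, 10.5, 9.8, 10.2, 9.9, 10.1, 10.3]
scores = outlier_scores(reference, [10.0, 25.0])

CUTOFF = 3.5  # assumed cut-off; tune it for your own data
flags = [score > CUTOFF for score in scores]
print(flags)
```

The same scoring can be applied to model outputs as well as inputs, which is one way to catch anomalies on both sides of a prediction.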

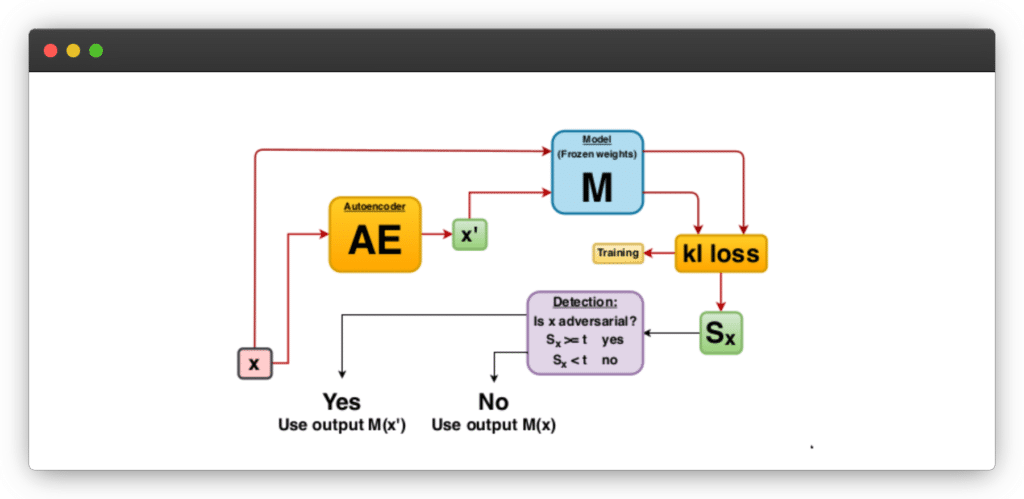

Adversarial Detection

Improve model security and robustness

Return a score that indicates the presence of features and instances that trick the model into a wrong outcome.


GUIDE

The Essential Guide to ML System Monitoring and Drift Detection

Learn when and how to monitor ML systems, how to detect data drift, and general best practices, plus insights from Seldon customers on future challenges.
